Writing a Hadoop MapReduce Program in Perl

Learn how to write MapReduce programs using Pig Latin. There are two components in Pig that work together in data processing. Pig Latin is the language used to express what needs to be done. An interpreter layer transforms Pig Latin programs into MapReduce or Tez jobs, which are processed in Hadoop.

How to execute a Perl program inside MapReduce in Hadoop?

I have a Perl program that takes an input file, processes it, and produces an output file. Now I need to run this Perl program on Hadoop, so that it runs on the data chunks stored on the edge nodes. The catch is that I shouldn't modify the Perl code. I don't know how to start. Can someone please give me any advice or suggestions?

Can I write a Java program that calls the Perl program from the mapper class using ProcessBuilder and combines the results in the reducer class?

Is there any other way to achieve this?

3 Answers

I believe you can do this with Hadoop Streaming.

See Tom White, Hadoop: The Definitive Guide, 3rd edition, page 622, Appendix C.

He used Hadoop to execute a Bash shell script as a mapper.

In your case, use your Perl script instead of that Bash shell script.

Use case: he has a lot of small files (one big tar file as input), and his shell script converts them into a few big files (one big tar file as output).

He used Hadoop to process them in parallel by supplying the Bash shell script as the mapper, so the mapper works on the input files in parallel and produces the results.

Example Hadoop command (copied from the book):

Replace load_ncdc_map.sh with your xyz.perl in both places (the last two lines of the command).

Replace ncdc_files.txt with another text file that contains the list of your input files to be processed (fifth line from the bottom).

Assumptions: you have a fully functional Hadoop cluster running and your Perl script is error-free.

Please try it and let me know.

ProcessBuilder is used in a Java program to call non-Java applications or scripts. It should work when called from the mapper class. You need to make sure that the Perl script, the perl executable, and the Perl libraries are available to all mappers.
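A minimal sketch of that idea, assuming Java 9 or later and a Unix-like node. The class name `ExternalCommandRunner` and the script name `process.pl` are hypothetical, and `cat` stands in for the Perl script so the example runs without Perl installed. It writes all input before reading any output, so it is only suitable for small records; a real mapper would need to read and write concurrently.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.List;

public class ExternalCommandRunner {

    /** Runs the command, writes input to its stdin, and returns everything it printed. */
    public static String run(List<String> command, String input)
            throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder(command);
        builder.redirectErrorStream(true); // merge stderr into stdout
        Process process = builder.start();

        // Feed the input to the child and close stdin so it sees end-of-input.
        try (OutputStream stdin = process.getOutputStream()) {
            stdin.write(input.getBytes(StandardCharsets.UTF_8));
        }

        // Collect everything the child wrote.
        String output;
        try (InputStream stdout = process.getInputStream()) {
            output = new String(stdout.readAllBytes(), StandardCharsets.UTF_8);
        }

        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("Command failed with exit code " + exitCode + ": " + output);
        }
        return output;
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical real-world call (script name is an assumption):
        //   run(List.of("perl", "process.pl"), record);
        String result = run(List.of("cat"), "bus,car,train\n");
        System.out.print(result);
    }
}
```

Inside a mapper, a call like `run(...)` would go in the `map` method, with the returned text written to the context as the map output.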

David Harris

Bit late to the party...

I'm about to start using Hadoop::Streaming. This seems to be the consensus module to use.

In this post, we provide an introduction to the basics of MapReduce, along with a tutorial to create a word count app using Hadoop and Java.

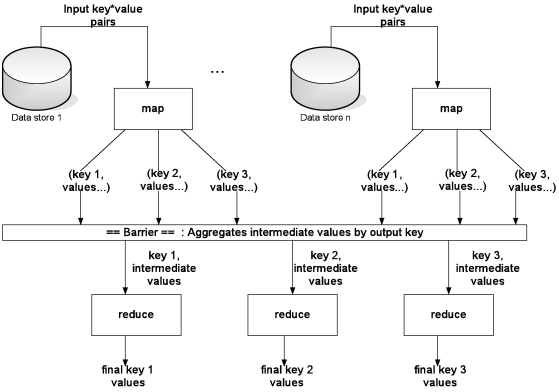

In Hadoop, MapReduce is a computation that decomposes large manipulation jobs into individual tasks that can be executed in parallel across a cluster of servers. The results of tasks can be joined together to compute final results.

MapReduce consists of 2 steps:

- Map Function – It takes a set of data and converts it into another set of data, where individual elements are broken down into tuples (Key-Value pair).

- Reduce Function – Takes the output from Map as an input and combines those data tuples into a smaller set of tuples.

Example – (Map function in Word Count)

| | Description | Data |
| --- | --- | --- |
| Input | Set of data | Bus, Car, bus, car, train, car, bus, car, train, bus, TRAIN, BUS, buS, caR, CAR, car, BUS, TRAIN |
| Output | Converted into another set of data (Key, Value) | (Bus,1), (Car,1), (bus,1), (car,1), (train,1), (car,1), (bus,1), (car,1), (train,1), (bus,1), (TRAIN,1), (BUS,1), (buS,1), (caR,1), (CAR,1), (car,1), (BUS,1), (TRAIN,1) |

Example – (Reduce function in Word Count)

| | Description | Data |
| --- | --- | --- |
| Input (output of Map function) | Set of tuples | (Bus,1), (Car,1), (bus,1), (car,1), (train,1), (car,1), (bus,1), (car,1), (train,1), (bus,1), (TRAIN,1), (BUS,1), (buS,1), (caR,1), (CAR,1), (car,1), (BUS,1), (TRAIN,1) |
| Output | Converted into a smaller set of tuples | (BUS,7), (CAR,7), (TRAIN,4) |

These counts assume that keys are compared case-insensitively (for example, by upper-casing each word in the Map function), so that Bus, bus, and BUS count as the same key.
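The two functions can be illustrated without Hadoop at all. The following is a minimal in-memory sketch in plain Java, not Hadoop code; the class name `WordCountSketch` is hypothetical, and upper-casing each word in the map step is an assumption made so that the result matches the counts in the Reduce example.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

public class WordCountSketch {

    // Map step: emit one (word, 1) pair per comma-separated token.
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String token : line.split(",")) {
            String word = token.trim().toUpperCase(Locale.ROOT);
            if (!word.isEmpty()) {
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Reduce step: sum the values of all pairs that share a key.
    public static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted by key
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        String input = "Bus, Car, bus, car, train, car, bus, car, train, bus, "
                + "TRAIN,BUS, buS, caR, CAR, car, BUS, TRAIN";
        System.out.println(reduce(map(input))); // prints {BUS=7, CAR=7, TRAIN=4}
    }
}
```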

Workflow of the Program

The workflow of MapReduce consists of 5 steps:

1. Splitting – The splitting parameter can be anything, e.g. splitting by space, comma, semicolon, or even by a new line ('\n').

2. Mapping – as explained above.

3. Intermediate splitting – The entire process runs in parallel on different nodes of the cluster. To group the data in the Reduce phase, records with the same key must end up on the same node.

4. Reduce – This is essentially a group-by phase: the values that share a key are combined.

5. Combining – The last phase, where all the data (the individual result set from each node) is combined to form the final result.
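The intermediate splitting step depends on every record with the same key being routed to the same place. A common way to do this is hash partitioning; Hadoop's default partitioner uses essentially this formula. The sketch below is plain Java for illustration only, with a hypothetical class name `PartitionSketch` and an assumed reducer count of 3.

```java
import java.util.ArrayList;
import java.util.List;

public class PartitionSketch {

    // Non-negative hash of the key, modulo the number of reducers.
    // Equal keys always produce the same partition number.
    public static int partitionFor(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    public static void main(String[] args) {
        int numReducers = 3; // hypothetical value for the example
        List<List<String>> buckets = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            buckets.add(new ArrayList<>());
        }

        // Route each mapped key to the bucket of its reducer.
        String[] keys = {"BUS", "CAR", "TRAIN", "BUS", "CAR", "BUS"};
        for (String key : keys) {
            buckets.get(partitionFor(key, numReducers)).add(key);
        }

        for (int i = 0; i < numReducers; i++) {
            System.out.println("reducer " + i + ": " + buckets.get(i));
        }
    }
}
```

Whatever bucket a key lands in, all of its occurrences land there together, which is what lets the Reduce step sum them in one place.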

Now Let’s See the Word Count Program in Java

Fortunately, we don’t have to write all of the above steps. We only need to write the splitting parameter, the Map function logic, and the Reduce function logic. The remaining steps execute automatically.

Make sure that Hadoop is installed on your system with the Java SDK.

Steps

1. Open Eclipse > File > New > Java Project > (name it MRProgramsDemo) > Finish.

2. Right-click > New > Package (name it PackageDemo) > Finish.

3. Right-click on the package > New > Class (name it WordCount).

4. Add the following reference libraries: right-click on the project > Build Path > Add External

/usr/lib/hadoop-0.20/hadoop-core.jar

/usr/lib/hadoop-0.20/lib/commons-cli-1.2.jar

5. Type the following code:
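A minimal sketch of what such a word count job could look like, assuming the `org.apache.hadoop.mapreduce` API from the Hadoop jars added above and comma-separated input. The inner class names `MapForWordCount` and `ReduceForWordCount` are hypothetical, and upper-casing each word is an assumption so that Bus, bus, and BUS are counted together. The `new Job(conf, name)` constructor matches the hadoop-0.20 library referenced in step 4; it is deprecated in later releases in favor of `Job.getInstance(conf, name)`.

```java
package PackageDemo;

import java.io.IOException;
import java.util.Locale;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Driver: configures the job and submits it.
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] files = new GenericOptionsParser(conf, args).getRemainingArgs();
        Path input = new Path(files[0]);   // input text file in HDFS
        Path output = new Path(files[1]);  // output directory (must not exist yet)

        Job job = new Job(conf, "wordcount"); // deprecated after 0.20; see note above
        job.setJarByClass(WordCount.class);
        job.setMapperClass(MapForWordCount.class);
        job.setReducerClass(ReduceForWordCount.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, input);
        FileOutputFormat.setOutputPath(job, output);
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    // Map: split each line on commas and emit (WORD, 1) for every token.
    public static class MapForWordCount
            extends Mapper<LongWritable, Text, Text, IntWritable> {
        private static final IntWritable ONE = new IntWritable(1);

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            for (String word : value.toString().split(",")) {
                String normalized = word.trim().toUpperCase(Locale.ROOT);
                if (!normalized.isEmpty()) {
                    context.write(new Text(normalized), ONE);
                }
            }
        }
    }

    // Reduce: sum the counts emitted for each word.
    public static class ReduceForWordCount
            extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        public void reduce(Text word, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            context.write(word, new IntWritable(sum));
        }
    }
}
```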

The above program consists of three classes:

- The driver class (containing `public static void main`; this is the entry point).

- The `Map` class, which extends the public class `Mapper<KEYIN,VALUEIN,KEYOUT,VALUEOUT>` and implements the `Map` function.

- The `Reduce` class, which extends the public class `Reducer<KEYIN,VALUEIN,KEYOUT,VALUEOUT>` and implements the `Reduce` function.

6. Make a jar file

Right-click on the project > Export > select Jar File as the export destination > Next > Finish.

7. Take a text file and move it into HDFS:

To move the file into HDFS, open a terminal and enter the following commands:

8. Run the jar file:

(hadoop jar jarfilename.jar packageName.ClassName PathToInputTextFile PathToOutputDirectory)

9. Open the result:
